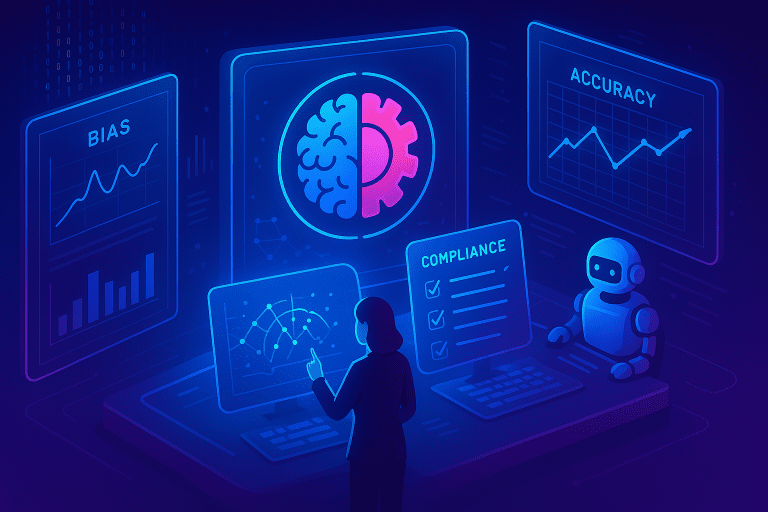

AI Model Auditing & Validation – Ensuring Accuracy, Fairness, and Regulatory Confidence

As AI systems take on a central role in decision-making in critical sectors, the integrity of their performance becomes a matter of both operational success and ethical responsibility. AI Model Auditing & Validation is the process of rigorously testing, evaluating, and certifying AI models to ensure they perform as intended: accurately, fairly, securely, and in full compliance with evolving regulatory frameworks.
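To make "accurately" and "fairly" concrete, here is a minimal sketch of one automated audit check in Python. It assumes binary labels and predictions, measures fairness with a single metric (the demographic-parity gap, i.e. the largest difference in positive-prediction rates between groups), and applies illustrative pass/fail thresholds. The function name `audit_model`, the `AuditResult` type, and both threshold values are hypothetical, not part of any standard or regulatory requirement.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AuditResult:
    accuracy: float
    positive_rate_by_group: dict[str, float]
    parity_gap: float
    passed: bool


def audit_model(y_true, y_pred, groups, min_accuracy=0.90, max_parity_gap=0.10):
    """Hypothetical audit check: overall accuracy plus a demographic-parity gap.

    y_true, y_pred: lists of 0/1 ground-truth labels and model predictions.
    groups: list of group identifiers (e.g. a protected attribute), same length.
    The default thresholds are illustrative assumptions, not regulatory values.
    """
    if len(y_true) == 0 or not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must be non-empty and of equal length")

    # Accuracy: fraction of predictions that match the ground truth.
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    accuracy = correct / len(y_true)

    # Positive-prediction rate per group (demographic parity compares these).
    totals: dict[str, int] = {}
    positives: dict[str, int] = {}
    for g, p in zip(groups, y_pred):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + (1 if p == 1 else 0)
    rates = {g: positives[g] / totals[g] for g in totals}

    # Parity gap: largest difference in positive rates between any two groups.
    parity_gap = max(rates.values()) - min(rates.values())

    # The model passes only if it clears both the accuracy and fairness bars.
    passed = accuracy >= min_accuracy and parity_gap <= max_parity_gap
    return AuditResult(accuracy, rates, parity_gap, passed)
```

Demographic parity is only one fairness definition; others, such as equalized odds, compare error rates across groups instead, and the appropriate metric and thresholds depend on the use case and applicable regulation.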

Why It’s Essential

An AI model's performance is not static. Models that are retrained or fine-tuned change over time, and even a model whose weights are frozen can lose accuracy as real-world data drifts away from the data it was trained on. Without continuous oversight, this can lead to biased outcomes, security vulnerabilities, or legal non-compliance. Auditing and validation act as a quality assurance framework, detecting these issues early and sustaining trust in the system.
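One common way to detect drift is to compare the distribution of a feature or model score in production against a baseline captured at validation time. The sketch below uses the Population Stability Index (PSI), computed as PSI = Σ (a_i − e_i) · ln(a_i / e_i) over bins, where e_i and a_i are the baseline and production proportions in bin i. The equal-width binning, the small `eps` floor for empty bins, and the 0.1 / 0.25 warning and alert cut-offs (widely used rules of thumb, not a formal standard) are assumptions, and the function names are hypothetical.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a new sample.

    expected: feature values (or model scores) from the validation baseline.
    actual: the same feature observed in production.
    Bin edges come from the baseline's min/max, split into equal-width bins
    (an illustrative choice; quantile-based bins are also common).
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the first/last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # The eps floor keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_status(psi, warn=0.1, alert=0.25):
    """Map a PSI value to a status using commonly cited rule-of-thumb cut-offs."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "stable"
```

In practice a check like this would typically run on a schedule for each monitored feature, with an "alert" status triggering revalidation or retraining rather than an automatic fix.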

Core Principles of Model Auditing

The Auditing Process

Sector-Specific Applications

Benefits of Comprehensive Auditing

The Future of AI Auditing

Bottom Line:

AI Model Auditing & Validation isn’t just about compliance; it’s about building AI systems that are reliable, fair, and trusted. Organizations that adopt robust auditing frameworks position themselves as leaders in responsible AI deployment, gaining a competitive advantage in an increasingly regulated and ethically aware marketplace.

© Copyright 2025 CHICAMUS AI Systems Inc.
